Table 1. General practice colleges standards for supervisors

| Australian College of Rural and Remote Medicine¹ |
|---|
| 7.2.7 The supervisor must conduct formative assessment of the registrar, in accordance with their stage of training. In the first 12 months of training, the supervisor agrees to undertake regular reviews (at least once every 4 months) of registrar patient consultations. This may be achieved by sitting in on patient consultations or through reviewing videotaped/audio-taped consultations supplied by the registrar. The supervisor will use this exercise to provide the registrar with feedback on their performance and to guide the registrar in self-evaluation. |
| Note: registrars are required to submit six consultations assessed using the miniCEX |
| 7.3.2 The supervisor must be skilled in assessing and providing feedback on performance, including establishing and reviewing learning plans. It is very important that positive and negative feedback are given appropriately and in a timely fashion. Feedback is best when it is based on first hand observation and when it is constructive in nature. It should be given as soon as possible when the opportunity occurs in a learning situation. Waiting until mid-term or end of placement to give feedback about deficiencies is potentially dangerous for patients and provides the registrar with little opportunity to improve. |

| Royal Australian College of General Practitioners² |
|---|
| Standard 1.1 – Supervision is matched to the individual registrar’s level of competence and learning needs in the context of their training post. |
| Criterion 1.1.1.1 The registrar’s competence is assessed prior to entry to the post and monitored throughout the term and training. |
| Standard 1.2 – A model of supervision is developed that in the context of the training post ensures quality training for the registrar and safety for patients. |
| There is a documented process by which the supervisor conducts and records the assessment activities and other means of determining a registrar’s competencies during their time in the placement. The process is approved by the training provider and regular reporting and feedback between training provider and supervisor is established. P6 |

¹ Reproduced with permission from the Australian College of Rural and Remote Medicine, from Primary rural and remote training: standards for supervisors and teaching posts. Brisbane: ACRRM, 2013.

² Reproduced with permission from the Royal Australian College of General Practitioners, from Vocational Training Standards. East Melbourne: RACGP, 2013.

Why assess?

Patient safety and registrars at risk

Early assessment1,2 helps supervisors gauge how much direct clinical oversight registrars need.3 The privacy of general practice consulting rooms can delay supervisors’ recognition that new registrars are struggling.4 Relying on registrars’ insight to know when to ask questions can be flawed, particularly for poor performers,5 who tend to overestimate their ability.6,7

Assessment facilitates early educational intervention

Educational organisations, such as regional training providers, rely on supervisors to assess registrars’ progress through training. A minority of registrars need extra educational support. Early identification of these doctors facilitates early intervention,2 which may lead to successful remediation. Very rarely, registrars may need to be removed from the training program and counselled to consider an alternative career.

Supervisors facilitating learning

There is a strong case that assessing registrars’ performance strengthens supervisors’ ability to facilitate registrars’ learning.

It is recognised that assessment drives learning.8 Assessment informs feedback, which registrars expect supervisors to be skilled in giving.9,10 Supervisors’ feedback is valued by registrars11 and has credibility because of their currency in the same job,12 and their longitudinal observations of registrars at work.13

Challenges for supervisors as assessors

|

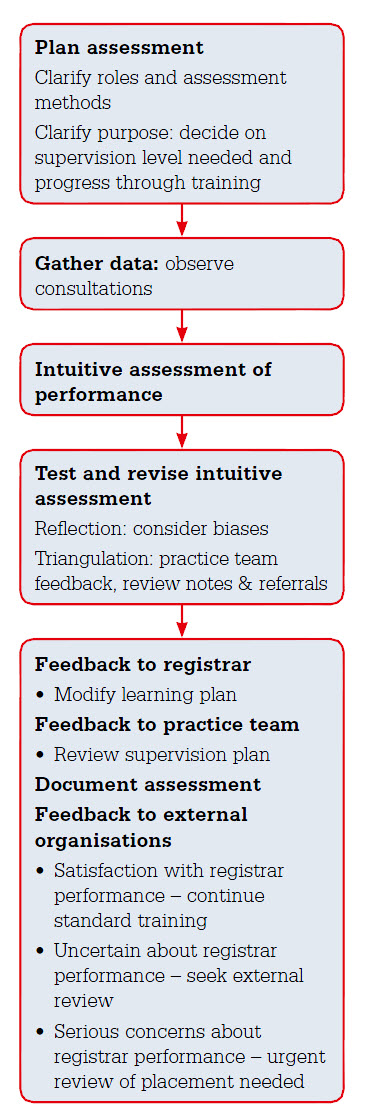

| Figure 1. Framework for in-practice assessment of registrars |

Supervisors who assess registrars may find it harder to also support them, and registrars’ willingness to be open about their vulnerabilities14 may be reduced. Assessment of registrars by their supervisors can exaggerate the power imbalance between them. This is particularly an issue when supervisors are also registrars’ employers, and/or visa sponsors, or when registrars provide valuable workforce to the practice. Time taken assessing registrars can also be costly.

Supervisors may doubt the accuracy of their assessment and opinion15 and may avoid expressing concerns about registrars’ performance, hoping that they will improve over time. This is particularly relevant if registrars have switched to practise in a different context, given the heterogeneity of general practices in Australia. For example, the content and culture of medical practice vary between Aboriginal Community Controlled Health Services and mainstream general practice. It can be difficult to distinguish between performance that will improve once registrars have adjusted to new contexts, and performance that indicates educational problems that are unlikely to resolve with experience. Supervisors have requested training in assessment,16 which the following guide, based on practical experience and research evidence, aims to provide (Figure 1).

Guide for supervisors in assessing registrars

1. Plan assessments

Clarify roles

General practice supervisors have multiple roles.17 A brief explanation to registrars of their assessment role can create clarity.18 Ideally, registrars should be advised at the beginning of their term how and when they will be assessed.

Clarify purpose

Traditionally, the purpose of assessment has been considered formative or summative. Formative assessment aims to improve teaching and learning, and summative assessment determines learners’ progress and can be used for certification of this progress. Recently, this divide has been bridged by the notion that all assessment should promote learning.19 The two main considerations for supervisors are whether registrars are performing safely in the context of their current level of supervision and whether registrars are performing at the expected level of their training.

Clarify assessment methods and their limitations

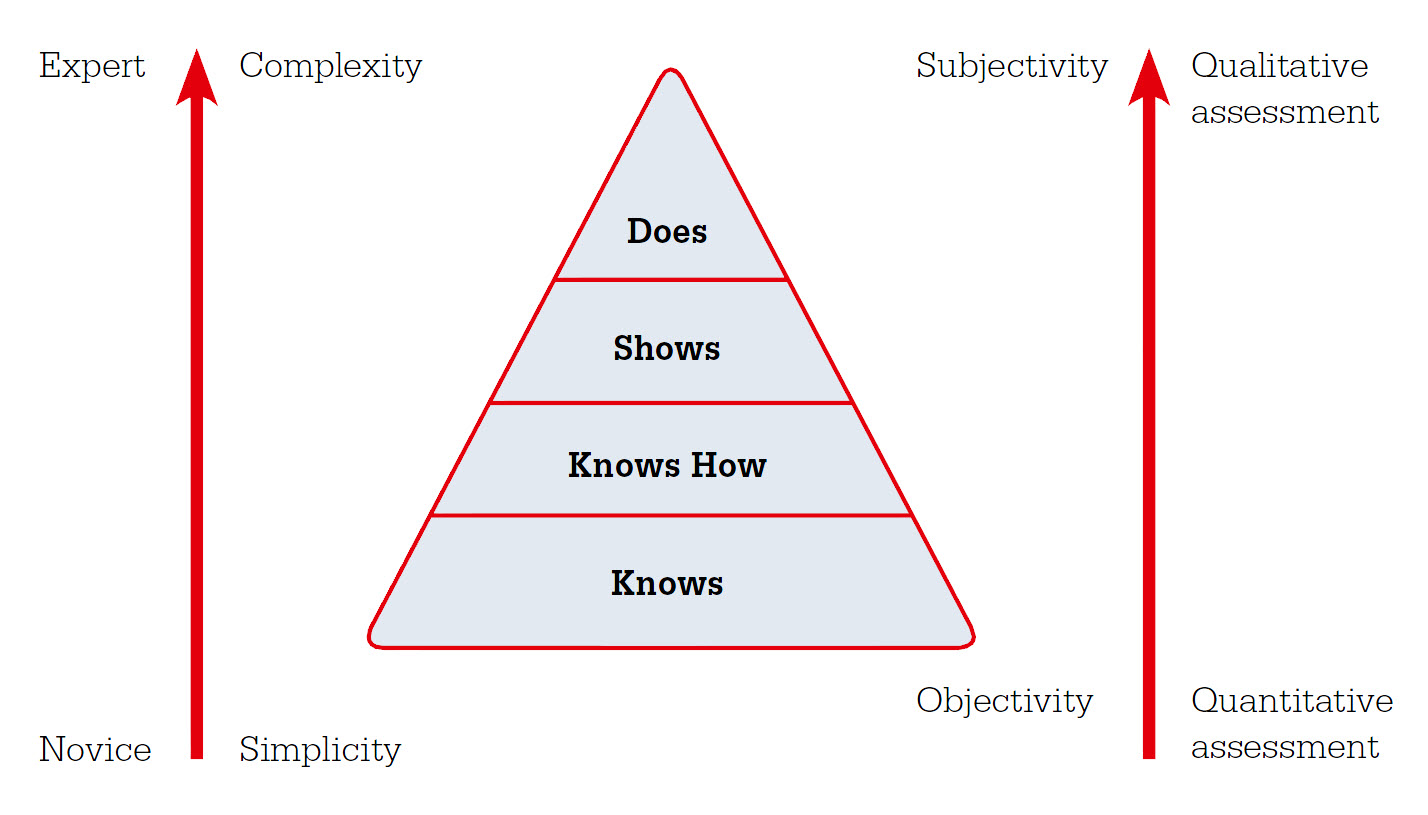

The choice of assessment method depends on what is being assessed and the purpose of the assessment, as all methods have advantages and disadvantages.20 Miller differentiated the assessment of clinical skills into competency assessment and performance assessment.21 Competency assessment evaluates what doctors do in controlled representations of professional practice and performance assessment evaluates what they actually do in practice.

Competency assessment has its basis in behaviourism22 and works successfully in the vocational education and training sector.23 Each skill is broken down into its components and checklists are created for assessors to complete when learners demonstrate competence in each component. However, competency at individual aspects of practice does not prove that doctors are able to perform skilfully at work. Good doctors are more than a sum of individual competencies, and focus on competency alone risks missing more important but less quantifiable elements of practice such as wisdom and clinical judgment.22 The Australian Medical Council proposed that ‘the coarse-grained concept of competent professional practice, where observed performance is more than the sum of the set of competencies used, should be retained’.23 As yet there is no dependable set of competency-based assessment tools for clinical practice.24

Professional performance is most appropriately judged by work-based assessment.25 For registrars this means observing their work in clinical practice (Figure 2). However, implementing work-based assessment has proved challenging and has been criticised for being unpredictable, unstandardised and biased.21,26 This is in part because experienced practitioners tend to make automatic intuitive judgments rather than engaging in analytic processes to reach judgments.27 Although such intuitive judgments are often more accurate,28 they are inherently subject to a range of biases29 and may overlook key data,27 resulting in judgment error. This can be problematic for ranking registrars on the basis of supervisors’ assessments21 or for assessments that lead to being granted the privilege of independent practice.30

Figure 2. Choosing methods to assess clinical practice21,22,25,34

Qualitative researchers face similar challenges in assessing the trustworthiness of their assertions and have tools that can be applied to these qualitative, intuitive, work-based assessments.25 These tools are prolonged engagement, triangulation, negative case analysis, reflexivity, peer debriefing, member-checking and audit trails (Table 2). The sources of error in work-based assessments can be addressed as below29 by applying these tools of analytical rigour31,32 to reconsider initial, intuitive judgments.

Table 2. Qualitative research tools applied to registrar assessment

| Tool | Description | Parallel in registrar assessment |
|---|---|---|
| Prolonged engagement | Engagement of researchers over an extended period of time with the phenomenon of interest | Assessment of registrars over an extended period |
| Triangulation | Collecting data from multiple sources | Using several methods of assessing registrars’ performance |
| Reflexivity | Critiquing the impact of the investigator on the data and the conclusions made | Supervisors examine their own biases and impact on registrars’ performance and assessment |
| Peer debriefing | Discussion of the data and its interpretation with other researchers | Discussion of registrars’ assessment with other members of the practice team |
| Negative case analysis | Seeking disconfirming evidence | Actively seeking evidence that refutes the assessment of registrars |
| Member checks | Checking the data and conclusions with the subjects of the investigation | Checking with registrars the supervisors’ observations and conclusions on their performance |
| Audit trail | Documenting the data collection, the methodology, decisions and rationale | Documenting the means of assessing registrars, and the ways of validating the assessment |

2. Collect the data – observe consultations

Most information comes from observing registrars’ consultations, either directly or via video recordings. Other options are random case note reviews,33 audits of investigation ordering4 and arranging consultations in which registrars present their diagnosis and management to supervisors before concluding the consultation. Watching for the red flags of registrars who are unconsciously incompetent, such as issues of punctuality, poor communication, defensive justifications and inability to change behaviour in the light of feedback, is also recommended.4

3. Make an initial judgment on performance

Next, supervisors need to make working diagnoses of registrars’ performances. This is an intuitive, overall assessment of the standard of registrars’ clinical practice.

4. Test the initial judgment using qualitative research methods

Using reflexivity to reduce bias

For supervisors, this means first reflecting on how current stresses and personal biases are likely to affect their judgments and whether the initial judgment should be revised.

A range of sources of bias can contribute to erroneous intuitive judgments.34 Some assessors are naturally stringent (hawks) or lenient (doves). Case-related bias (the hobby-horse effect) arises when the subject of the assessment activity is a supervisor’s specific interest, which results in their having higher expectations of performance. Recent ‘near-miss’ experiences by supervisors may similarly alter their performance expectations for particular presentations. Subject-related bias (the halo effect)35 occurs when learners perform one aspect of a consultation particularly well, which leads the supervisor to assess the remainder of the performance highly; the converse can also occur. Using a structured form, such as the mini-CEX, can ensure all domains and aspects of the consultation are considered. Social and cultural biases are important to recognise, as registrars towards whom supervisors have a natural affinity are more likely to be regarded positively.

Triangulation

Triangulation of the data can be achieved by using multiple methods of observing registrars’ performance (see step 2 above). Work-based observations can also be triangulated against non-work-based competency assessments such as knowledge tests and role playing.

Prolonged engagement

Programmatic assessment enacts prolonged engagement by using a combination of assessment instruments, each of which is used to assess multiple facets of learning at intervals during training.36 This is more commonly arranged by educational organisations than by individual supervisors or their practices.

Negative case analysis

Negative case analysis is a deliberate effort to reveal key evidence that refutes the initial judgment, which may have been overlooked. For example, is a registrar whose practice was good on observation over-investigating or not getting repeat bookings from patients?

Peer debriefing

Peer debriefing would normally be achieved by discussing the assessment of the registrar with other practice or supervisory team members.

Audit trail

Documenting assessments creates an audit trail for supervisors, registrars or educators to review decisions if needed. This is usually in writing but a brief voice memo made immediately after observing registrars may be quicker, and can be transcribed later.

5. Action – feedback to the registrar and practice team

Supervisors then give feedback to registrars about their perceived strengths and weaknesses. Registrars can use this information to plan their future learning and supervisors can modify the practice supervision plan if needed.

6. Action – feedback to external organisations

The external organisation responsible for overseeing the whole of registrars’ training needs to know the outcome of supervisors’ assessments. Adequate performance permits progress through training and continuation of current supervision levels. For a minority of registrars, supervisors will judge their performance as below expectations. These judgments require supervision levels to be adjusted to ensure patient safety, and require the educational organisation to arrange further registrar assessments and determine what, if any, remedial action is indicated.30 If assessment reveals consistent problems and patient safety is threatened, supervisors may need to seek further external assessors, or significantly reduce or, rarely, even remove registrars’ rights to practise.

Summary

General practice supervisors have roles in registrar assessment to promote both patient safety and registrar learning. Work-based assessment of performance seems to be the most accurate and feasible way to assess registrars’ day-to-day work, but is prone to error. An assessment framework, with explicit processes that aim to minimise bias and errors, is recommended. General practice supervisors’ assessments can produce working diagnoses of registrars’ performances, which guide the level of support and clinical oversight needed, and flag registrars who require further assessment by educational organisations for remediation decisions.

Acknowledgements

We would like to thank Dr Hamshi Singh, Registrar Research and Development Officer at General Practice Education and Training for her insightful comments on earlier drafts of this article, and Dr Tim Clements and Dr Patrick Kinsella from Southern GP Training, who collaborated with us at workshops that started our discussions about supervisors as assessors.

Competing interests:

Southern GP Training paid Susan Wearne to assist with a workshop for supervisors on this topic. The views expressed in this article are those of the authors and not necessarily those of their respective employers. Southern GP Training is undertaking a research project ‘Ad hoc supervisory encounters between GP-supervisors and GP-registrars: Enhancing quality and effectiveness.’

This was funded by a GPET Education Integration research grant.

Provenance and peer review: Not commissioned, externally peer reviewed.